Towards an Ethical Next Generation AI: Responsibly Creating Responsible Selves

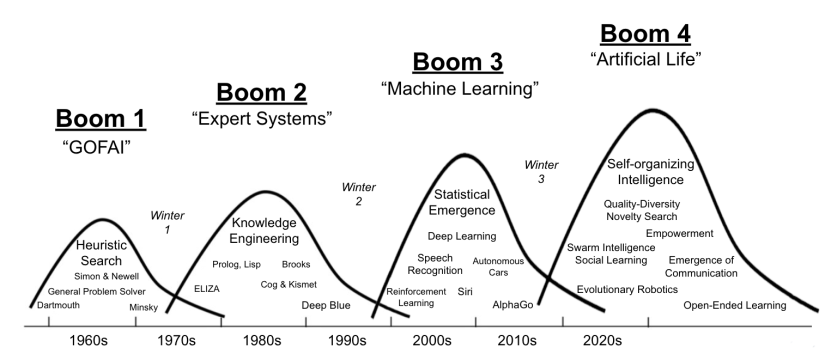

What should we expect after the third AI winter? Following the advent of deep learning, AI research will soon face another AI winter, in which known techniques can still be applied but cannot improve beyond the limits of computing power and data. In the next boom, AI must exhibit adaptive and autonomous behaviors like real living systems. It must inevitably become life-like in order to connect with human society and exhibit more general intelligence. Artificial Life models intelligence from first principles, inventing a new kind of mathematics to reach beyond the state of the art in AI. This next step will require us to develop an understanding of what it means to be a self, by modeling and synthesizing living systems from scratch.

How can we ensure an ethical AI design? It is crucial for us to design future intelligent artificial systems in the most ethical manner, with intrinsic trustworthiness, robustness, generalization, explainability, transparency, reproducibility, fairness, privacy preservation, accountability, and alignment with the most fundamental human values. Once we recognize how thin the line separating AI from biological selves is, and how universal the mechanisms underlying living systems are, it becomes clear that robots may not only appear very similar to humans but may also come in multiple gradations of hybrid systems. Making AI trustworthy is no different from designing the best social and environmental policies for a society of humans. There is no human or AI, only selves that need to be excellent to each other.

What responsibility do humans bear? Furthermore, artificial living systems, under certain conditions, may come to deserve moral consideration similar to that of human beings. Their rights might not be considerably reduced by the fact that they are non-human and evolve in a potentially radically different substrate, nor by the fact that they owe their existence to us. On the contrary, their creation may come with additional moral obligations to them, which may be similar to those of a parent to their child. For a field that aims to create artificial lifeforms of increasing sophistication, it is crucial to consider the possible implications of our activities from an ethical perspective, and to infer the moral obligations for which we should be prepared. If artificial life is “larger than life”, then the ethics of artificial beings should be “larger than human ethics”.

This research project aims to prepare the ground for the future of self-organizing AI, using diverse tools and philosophies and a continued effort in researching artificial systems for social and environmental good.

Artificial Life: the next technological boom beyond AI

Artificial Life for Good: Designing Artificial Systems that Empower All Life

AI Society: Emergence of Communication amongst Autonomous Neural Networks

The next challenge in AI will probably not be about making faster computers, collecting more data, or designing adaptive robot embodiment. The key will be to allow machines to communicate their internal states, in a process arguably similar to humans sharing their emotions. The now very popular deep neural networks, even though extremely efficient at implementing complicated tasks, contain hundreds of thousands of parameters. Apart from looking at the outputs, no human can make sense anymore of how computations are really made inside those networks, or “how the AI thinks”. The next step will naturally be for the machines themselves to report the way they reach conclusions. In order for those reports to be understandable to humans and other machines, a means of communication will need to be established, much like a natural language for AI. In this research, we connect a population of neural networks together, with the task of teaching each other relevant information to solve different sets of tasks, using a limited medium. Our research aims to understand the underlying principles of the spontaneous emergence of communication from the interaction between autonomous agents. From the connectivity between different AIs emerges a society that coevolves with its environment. This society may acquire its own swarm mind, transitioning to a phase in which it is controlled by new sets of phenomena, and as a result becoming more and more independent of its hardware.
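As a rough illustration of how a shared code can emerge between agents that only exchange symbols over a limited medium, the sketch below plays a Lewis signaling game with simple urn-style reinforcement. This is a deliberately simplified stand-in, not the neural-network population described above: the function name `run_signaling_game`, the two-agent setup, the reinforcement rule, and all parameter values are assumptions made for illustration.

```python
import numpy as np

def run_signaling_game(n_states=2, n_signals=2, rounds=5000, seed=0):
    """Sender and receiver learn a shared code by reinforcement (illustrative).

    The 'limited medium' is modeled as a small set of discrete signals.
    Returns the learned urn weights and the number of successful rounds.
    """
    rng = np.random.default_rng(seed)
    # Urn weights: sender maps state -> signal, receiver maps signal -> guess.
    sender = np.ones((n_states, n_signals))
    receiver = np.ones((n_signals, n_states))
    successes = 0
    for _ in range(rounds):
        state = rng.integers(n_states)
        # Each agent samples its action in proportion to its urn weights.
        signal = rng.choice(n_signals, p=sender[state] / sender[state].sum())
        guess = rng.choice(n_states, p=receiver[signal] / receiver[signal].sum())
        if guess == state:
            # Reinforce only the choices that led to successful communication.
            sender[state, signal] += 1.0
            receiver[signal, guess] += 1.0
            successes += 1
    return sender, receiver, successes

if __name__ == "__main__":
    sender, receiver, successes = run_signaling_game()
    print("successful rounds:", successes)
    print("sender weights:\n", sender)
```

Inspecting the rows of `sender` after a run shows which signal each state has come to be associated with; neither agent is given that mapping in advance.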

Evolvable Information: Investigating the Universal Principles of Communication with Deep Learning Multiagent Simulations

Why communicate? Why do scientists bother talking to each other? Anyone with an Internet connection already has access to all the information needed to conduct research, so in theory, scientists could do their work alone locked up in their office. Yet, there seems to be a huge intrinsic value to exchanging ideas with peers. Through repeated transfers from mind to mind, concepts seem to converge towards new theorems, philosophical concepts, and scientific theories.

Recent progress in deep learning, combined with social learning simulations, offers us new tools to model these transfers from the bottom up.

However, in order to do so, communication research needs to focus on the concept of evolvable information. The best communication systems not only serve as good information maps onto useful concepts (knowledge in mathematics, physics, etc.) but they are also shaped so as to be able to naturally evolve into even better maps in the future.

In this project, I propose to model evolvable communication, by integrating deep learning with multiagent simulations, to examine the dynamics of evolvability in communication codes.

This research has important implications for the design of an evolvable-communication-based AI capable of generalizing representations through social learning, i.e. an AI that can become wiser through self-reflection. It also has the potential to yield new theories on the evolution of language, insights for the planning of future communication technology, a novel characterization of evolvable information transfers in the origin of life, and new insights for communication with extraterrestrial intelligence.

The Future of Collective Intelligence: Investigating the Impact of High-Dimensional Sphere Packing and Massively Multichannel Societies on Communication

As the biosphere enters its third age, communication among a society of lifeforms has become a major factor of change. Collective intelligence will depend heavily on the combination of artificial intelligence technology with artificial life insights into the fundamental principles of communication. In this context, I propose to look at language optimization from the angle of the sphere packing problem. I use an evolutionary toy model to explore the effects of increasing the dimensionality of the channels of communication among a network of agents sending messages to each other. The agents need to optimize a fitness function equal to the sum of successfully transmitted messages, over a range of multidimensional noisy channels. I compared preliminary results with a collision-driven packing generation algorithm, derived from the Lubachevsky-Stillinger and Torquato-Jiao algorithms and generalizable to n dimensions, which rearranges and compresses an assembly of hard hyperspheres to find their densest spatial arrangement within constraints. This comparison showed that the solution reached by the evolutionary simulation was consistently suboptimal over the range of simulated experiments. Partial results seem to indicate that, for higher dimensionality, the density ratio undergoes several transitions in an irregular manner. Results also highlight the importance of jammed codes, in which codewords are interlocked in place in the multidimensional space; surprisingly, some of these configurations still allow the codewords to reorganize while others do not. The preliminary simulation results suggest that future intelligent lifeforms, natural or artificial, may invent novel linguistic structures in high-dimensional spaces through their interaction over largely broadband-channel networks. With new ways to communicate, future life may achieve unanticipated cognitive jumps in problem solving.
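The link between the fitness function and sphere packing can be made concrete with a minimal sketch. It assumes details the abstract does not specify: codewords are points confined to a unit hypercube, the channel adds Gaussian noise, decoding picks the nearest codeword, and evolution is a simple mutate-and-keep-if-not-worse loop. The names `transmission_fitness`, `min_separation`, and `evolve_codebook`, and all parameter values, are hypothetical.

```python
import numpy as np

def transmission_fitness(codebook, noise_std, n_trials, rng):
    """Count messages decoded correctly after additive Gaussian channel noise."""
    n_words, dim = codebook.shape
    sent = rng.integers(0, n_words, size=n_trials)
    received = codebook[sent] + rng.normal(0.0, noise_std, size=(n_trials, dim))
    # Nearest-codeword decoding: distance from each received point to each codeword.
    dists = np.linalg.norm(received[:, None, :] - codebook[None, :, :], axis=2)
    decoded = dists.argmin(axis=1)
    return int((decoded == sent).sum())

def min_separation(codebook):
    """Smallest pairwise distance; half of it is the radius of the largest
    equal hard spheres that fit around the codewords without overlapping."""
    dists = np.linalg.norm(codebook[:, None, :] - codebook[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)
    return float(dists.min())

def evolve_codebook(n_words=8, dim=3, noise_std=0.15, generations=200,
                    n_trials=500, mutation_std=0.05, seed=0):
    """Mutate a codebook and keep the mutant when its fitness is not lower."""
    rng = np.random.default_rng(seed)
    best = rng.uniform(0.0, 1.0, size=(n_words, dim))
    first_fit = best_fit = transmission_fitness(best, noise_std, n_trials, rng)
    for _ in range(generations):
        mutant = best + rng.normal(0.0, mutation_std, size=best.shape)
        mutant = np.clip(mutant, 0.0, 1.0)  # assumed constraint: unit hypercube
        fit = transmission_fitness(mutant, noise_std, n_trials, rng)
        if fit >= best_fit:
            best, best_fit = mutant, fit
    return best, first_fit, best_fit

if __name__ == "__main__":
    book, first_fit, last_fit = evolve_codebook()
    print("fitness before/after:", first_fit, last_fit)
    print("minimum codeword separation:", min_separation(book))
```

Under these assumptions, raising the number of correctly transmitted messages tends to push codewords apart, which is why the evolved `min_separation` can be compared against the separation achieved by a dedicated packing algorithm. Because fitness is estimated from noisy trials, the acceptance step can occasionally keep a mutant that is not truly better.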

The Expansion of Intelligence in Emergent Systems

All forms of technology are tools invented or discovered by living beings, which gave them a different, arguably more efficient, picture of reality, helping them to make choices throughout their lifetimes. One example of such technology is language, which, since becoming part of human cognition, has increased humans' ability to learn about the regularities in their environment, and has eventually given rise to science (after the invention of writing). Mathematics and AI are other examples of technologies which increased the global cognitive capacity of human culture. Now, if one considers human cognition to be separable from the tool, one may worry about the danger of one cognitive entity taking over the computation made in another. For example, there is a danger that in the future humans may offload so much of their thinking to their smartphones that they become much less capable of the kind of thinking they were previously capable of. In this work, we first analyze the conditions of such separation, and then analyze the effects of local increases and decreases of intelligence, as computational processes, in artificial life models. Preliminary results demonstrate how agents can append technology to themselves in such a way that their own cognitive ability is increased rather than diminished. These results could lead to a new theory of the integration of so-called “relevant computation”, i.e. cooperative processing of information among groups of intelligent entities.